The "Hallucination" Crisis (Why We Hit a Wall)

If you have used AI for business in the last three years, you have felt the frustration.

You ask ChatGPT to write a blog post, and it gives you three paragraphs of robotic fluff using words like "delve," "landscape," and "testament to." You ask an AI video generator for a clip of a person eating spaghetti, and it melts the fork into their hand.

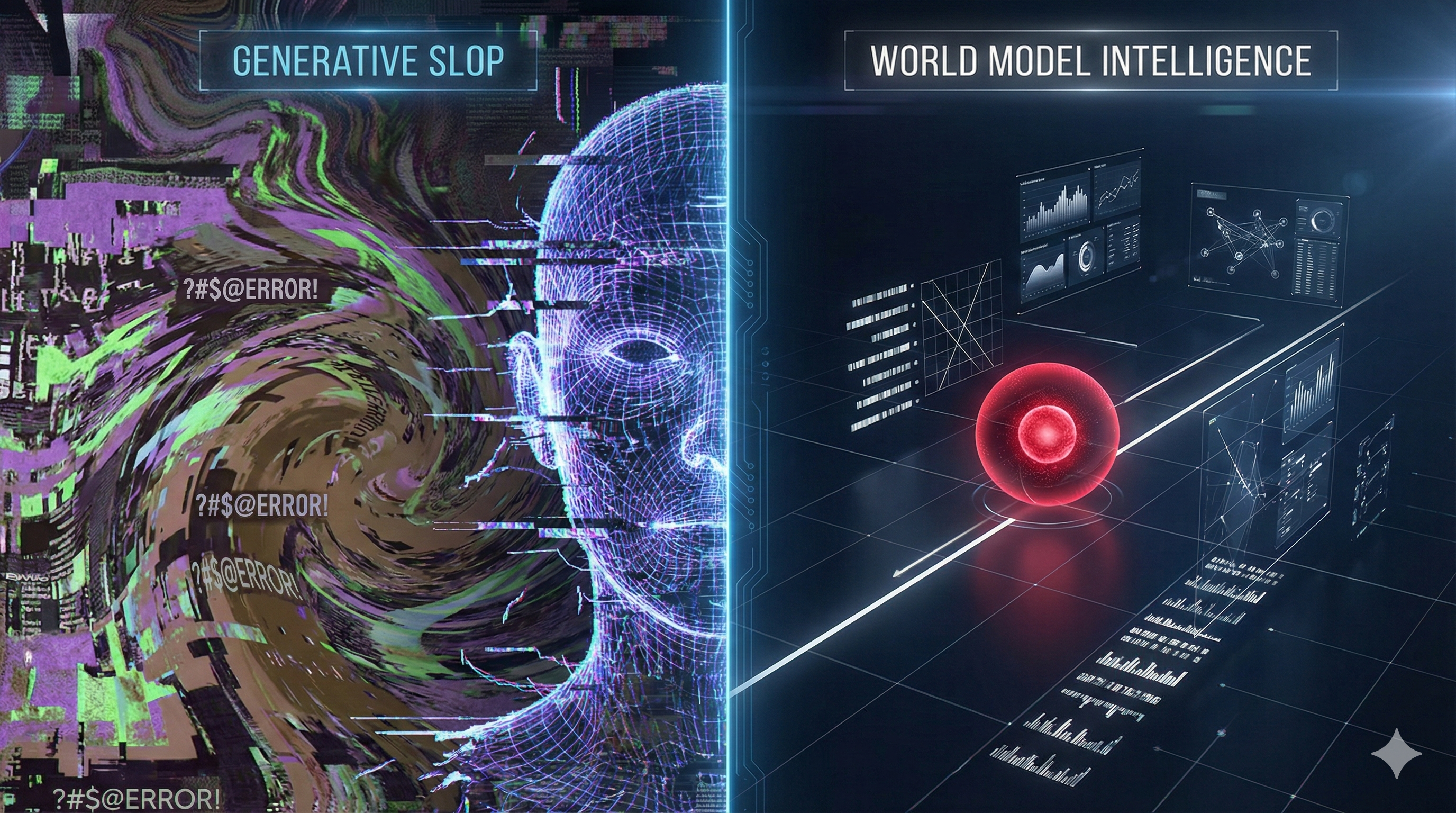

For a long time, we accepted this as the cost of doing business. We accepted that AI was just a "Probability Machine"—a tool that guesses the next word or pixel based on internet statistics.

The Hard Truth: Generative AI, including LLMs, doesn't know what is true. It only knows what is likely. It has no built-in model of physics or of cause and effect. Critics call it a "Stochastic Parrot": a system that repeats patterns without understanding meaning.

In late 2025, the cracks started to show. Brands stopped trusting AI content. Google started penalizing "AI Slop." The industry hit a wall.

But while the world was distracted by the latest chatbot update, a quiet revolution happened in the research labs. A new architecture emerged that doesn't just guess—it understands.

This is the story of World Models, and why the "Generative" era is officially over.

The "Red Dot" Test (Generative vs. Predictive)

To understand why EchoPulse pivoted our entire agency strategy to this new tech, you have to understand the "Red Dot" Test.

This experiment tests Object Permanence, a concept borrowed from developmental psychology: the ability to know that an object still exists even when you can't see it.

The Experiment

Researchers take a video of a person walking across a room. Then, they turn off the lights in the video, plunging the screen into total darkness. They ask the AI to keep tracking the person.

1. The Generative AI (The Old Way)

- How it works: It predicts raw pixels, one frame at a time.

- The Result: When the lights go out, the pixels disappear. The AI "forgets" the person exists. If it tries to guess the next frame, it hallucinates—maybe generating a random object or losing the person entirely.

- Verdict: Failed. It has no mental model of the world.

2. The World Model / JEPA (The New Way)

- How it works: It predicts concepts (Embeddings), working in an abstract representation space instead of in pixel space.

- The Result: When the lights go out, the AI keeps a "Red Dot" locked exactly where the person should be. It calculates their speed and trajectory. It knows they are still there, moving in the dark.

- Verdict: Passed. It understands physics and reality.
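To make the "Red Dot" idea concrete, here is a minimal sketch of what "calculating speed and trajectory" means. This is not VL-JEPA: it is a hand-written constant-velocity tracker, and the `Track` class, the `step` function, and the sample coordinates are all hypothetical names and values chosen for illustration. A learned world model would carry this kind of state in embedding space instead of as explicit x/y numbers.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Internal state: where the person is and how fast they are moving."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def step(track, observation, dt=1.0):
    """Advance the track by one frame.

    observation is an (x, y) tuple when the person is visible,
    or None when the frame is dark.
    """
    if observation is None:
        # Nothing to see: coast along the last known velocity.
        return Track(track.x + track.vx * dt, track.y + track.vy * dt,
                     track.vx, track.vy)
    ox, oy = observation
    # Visible: snap to the observation and re-estimate velocity.
    return Track(ox, oy, (ox - track.x) / dt, (oy - track.y) / dt)


# Three lit frames, then the lights go out for three frames (illustrative data).
frames = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0), None, None, None]

track = Track(*frames[0])
for obs in frames[1:]:
    track = step(track, obs)

print(track)  # the "red dot" has kept moving in the dark
```

The point of the sketch is the `observation is None` branch: a system with internal state keeps a position estimate when the input disappears, while a system that only reacts to pixels has nothing to work with.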

The Tech: Introducing VL-JEPA

The technology behind this is called VL-JEPA (Vision-Language Joint Embedding Predictive Architecture).

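Unlike ChatGPT, which predicts the next token of text, VL-JEPA predicts in embedding space: it estimates an abstract representation of the answer instead of generating words or pixels one at a time. It builds an internal representation of the video before it ever outputs a result.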

"Generative AI dreams. World Models think."

The "Post-Generative" Application (How We Use It)

So, we have an AI that understands physics and concepts. Great. How does that make you money?

At EchoPulse, we don't use this tech to write poetry. We use it to solve the three biggest problems in modern video marketing: Discovery, Safety, and Speed.

1. Semantic Search (Finding the Needle in the Haystack)

Most brands are sitting on a goldmine they can't touch. You have terabytes of Zoom calls, podcasts, and event footage. But if you want to find a specific clip, you are stuck.

- The Old Way (Keywords): You search for filenames like final_v3_edit.mp4 or generic tags like "meeting." If you search for "handshake" and the file isn't tagged "handshake," you find nothing.

- The EchoPulse Way (VL-JEPA): We ingest your entire archive into a vector database. We can then ask the footage questions in plain English:

"Find the exact second where the CEO looks frustrated about interest rates."

"Show me every clip where the product is used outdoors in low light."

- The Result: The AI "sees" the frustration. It "understands" low light. It retrieves the exact timestamp instantly, allowing us to repurpose old assets into new viral content in minutes, not days.
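For readers who want to see the mechanics, the retrieval step behind a vector database can be sketched as a nearest-neighbor lookup by cosine similarity. Everything specific here is an assumption for illustration: the three-number embeddings are toy values written by hand, the filenames and timestamps are made up, and `search` is a hypothetical helper. In a real pipeline, a video-language encoder would produce both the clip embeddings and the query embedding, and a vector database would do the lookup at scale.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical index: (file, start_second, embedding of that moment).
index = [
    ("q3_townhall.mp4", 754, [0.9, 0.1, 0.0]),
    ("product_demo.mp4", 32, [0.1, 0.8, 0.3]),
    ("final_v3_edit.mp4", 120, [0.0, 0.2, 0.9]),
]


def search(query_embedding, index, top_k=1):
    """Return the top_k (file, second, score) entries closest to the query."""
    scored = [(cosine(query_embedding, emb), name, second)
              for name, second, emb in index]
    scored.sort(reverse=True)
    return [(name, second, round(score, 3))
            for score, name, second in scored[:top_k]]


# Stand-in for the embedding of a plain-English question.
query = [0.85, 0.15, 0.05]
results = search(query, index)
print(results)
```

Note that the filename never enters the comparison. The match is made on the embedding alone, which is why a clip named final_v3_edit.mp4 is exactly as findable as a well-labeled one.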

2. "Grounded" Brand Safety

The biggest risk for big brands is having their ad appear next to "unsafe" content. Text-based AI is terrible at spotting this.

- Scenario: A user comments, "This soda is the bomb!"

- Text-Only AI: With no view of the video, a keyword filter or context-blind classifier can flag it as "Violence" (bomb) or "Terrorism."

- World Model AI: Watches the video. It sees the user smiling and giving a thumbs up. It understands that "bomb" is slang for "awesome" in this context.

- The Benefit: We protect your brand from embarrassment without blocking legitimate engagement.

3. The "Director Agent" (Automated Editing)

The trend for 2026 isn't "Chatbots." It is Agentic AI. We don't just use AI to cut out silence (that's easy). We build "Director Agents" that understand narrative.

- How it works: The Agent watches a 2-hour webinar. It tracks the "Semantic Engagement"—when did the speaker get passionate? When did the audience laugh? It uses a World Model to identify the "climax" of the story.

- The Output: It autonomously edits a vertical short that starts with a hook and ends with a resolution—not because it guessed, but because it analyzed the emotional arc of the footage.
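The selection step can be sketched in a few lines, assuming the hard part is already done and we have one "Semantic Engagement" score per second of footage. How those scores are produced is not shown here; the `best_window` function and the sample scores are hypothetical and only illustrate how a peak segment could be picked once the scores exist.

```python
def best_window(scores, length):
    """Return (start, end) of the contiguous window with the highest total score.

    scores: one engagement score per second of footage.
    length: desired clip length in seconds.
    """
    if length <= 0 or length > len(scores):
        raise ValueError("length must be between 1 and len(scores)")

    window_sum = sum(scores[:length])
    best_sum, best_start = window_sum, 0

    # Slide the window one second at a time, updating the sum incrementally.
    for start in range(1, len(scores) - length + 1):
        window_sum += scores[start + length - 1] - scores[start - 1]
        if window_sum > best_sum:
            best_sum, best_start = window_sum, start

    return best_start, best_start + length


# Illustrative per-second scores for a ten-second stretch of a webinar.
scores = [1, 1, 2, 8, 9, 7, 2, 1, 1, 3]
start, end = best_window(scores, 3)
print(start, end)
```

A real Director Agent would need more than a peak detector, since a hook and a resolution are narrative judgments and not just high scores, but the sliding window shows the basic shape of turning a score timeline into cut points.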

The SEO Revolution (Why "Grounded" Content Wins)

If you are still writing blog posts with standard ChatGPT and publishing them unedited, you risk hurting your website's ranking.

Google's 2026 Algorithm Update declared war on "AI Slop." The search engine is now optimizing for Information Gain—rewarding content that provides new facts, data, or perspectives not found elsewhere.

The "Slop" Trap

- LLMs regurgitate the average of the internet. If 1,000 articles say "Content is King," the LLM will write "Content is King." It provides Zero Information Gain.

The EchoPulse Advantage: "Grounded Content"

When we write articles for our clients, we don't just prompt a text model. We feed a World Model actual video data, interviews, and proprietary datasets.

- The Process: The AI "watches" your product demo. It observes that the interface loads in 0.2 seconds.

- The Writing: It writes: "The interface loads in 0.2 seconds," instead of "The interface is fast."

- The Result: Concrete, data-rich content that Google loves because it is based on observed reality, not internet averages.

Pro Tip for CMOs: We also use VL-JEPA to generate Video Metadata. When we publish a video for you, we inject frame-by-frame semantic tags. This means if a user searches for a specific concept on Google, your video can show up indexed at the exact second that concept appears.
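One plausible way to publish timestamped tags is structured data using the schema.org vocabulary, where a VideoObject lists its key moments as Clip entries with startOffset and endOffset in seconds. The sketch below is an assumption about format, not a description of the EchoPulse pipeline: the `video_jsonld` helper, the example.com URL, the `?t=` deep-link pattern, and the moment labels are all hypothetical, and whether a search engine actually surfaces these moments is up to the search engine.

```python
import json


def video_jsonld(name, url, upload_date, moments):
    """Build schema.org VideoObject markup with one Clip per tagged moment.

    moments: list of (label, start_second, end_second) tuples.
    """
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "contentUrl": url,
        "uploadDate": upload_date,
        "hasPart": [
            {
                "@type": "Clip",
                "name": label,
                "startOffset": start,
                "endOffset": end,
                # Assumed deep-link format; depends on the video player.
                "url": f"{url}?t={start}",
            }
            for label, start, end in moments
        ],
    }


# Hypothetical moments, e.g. produced by a tagging model.
doc = video_jsonld(
    name="Product demo",
    url="https://example.com/videos/product-demo",
    upload_date="2026-01-15",
    moments=[
        ("Interface loads", 32, 40),
        ("Outdoor low-light test", 95, 130),
    ],
)

print(json.dumps(doc, indent=2))
```

The resulting JSON would typically be embedded in the page inside a script tag of type application/ld+json.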

The Strategic Pitch (Why EchoPulse?)

The departure of Yann LeCun from the mainstream "Generative" race was not a retreat. It was an advance toward higher ground. By declaring LLMs a "dead end," he signaled the start of the Era of Physical Intelligence.

At EchoPulse, we heard the signal.

We made the hard choice to pivot. We ignored the hype of the "next GPT" and invested in the physics-based, rigorously tested power of World Models.

We are no longer just a "Creative Agency." We are a Signal Processing Firm.

We don't just "make content."

- We archive your reality.

- We protect your brand with physics-based safety.

- We engineer your growth with predictive intelligence.

The era of "Generative Guessing" is over. The era of Understanding has begun.

Are you ready to stop generating slop and start engineering truth?