Introduction: Editing is Not Art. It is Math.

If you think video editing is about "making things look pretty," you are stuck in 2015. In 2025, video editing is about Velocity.

The algorithm does not have eyes. It cannot appreciate your color grading or your cinematic lighting. It only measures data points:

- Did the viewer stop scrolling?

- Did they watch past the 3-second mark?

- Did they watch until the end?

- Did they re-watch it?

As an agency, we don't hire "Video Editors." We hire Retention Engineers. Their job is not to win an Oscar. Their job is to prevent the viewer's thumb from moving for 60 seconds.

This guide reveals the exact Frame-by-Frame Editing Protocol we use to generate millions of views. We are opening the black box of our post-production process.

Chapter 1: The "Zero Dead Air" Policy (Auditory Pacing)

The first step in our editing workflow is "The Tighten." Human speech is full of inefficiencies. We take breaths. We say "Um." We pause to think. In real life, this is natural. On TikTok, it is fatal.

The "J-Cut" Technique

Amateur editors cut audio and video at the same time. This creates a choppy, robotic feel. We use J-Cuts.

- The Logic: You hear the audio of the next clip before you see the video of it.

- The Effect: It drags the viewer's brain forward. By the time they see the new scene, they are already listening to it. It creates a seamless stream of consciousness.

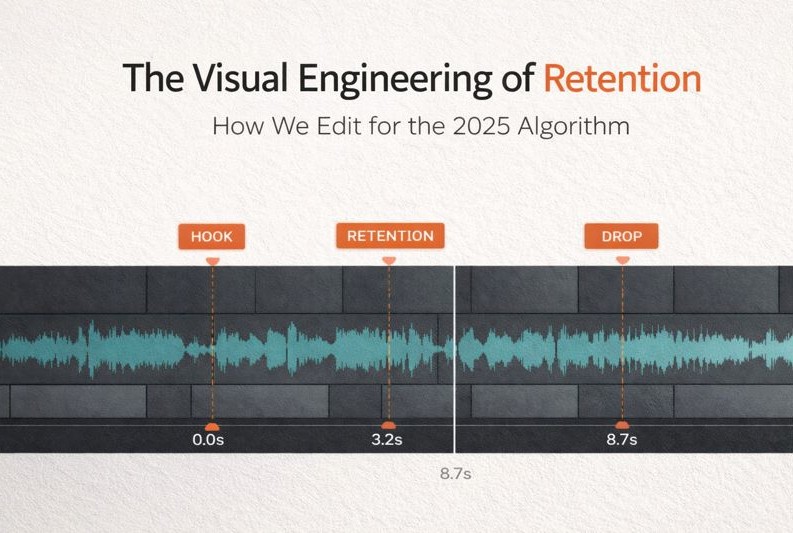
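Here is a minimal sketch of the J-Cut logic above, modeling a single edit point in timeline frames. The `Edit` class, the 30fps timeline, and the 8-frame audio lead are illustrative assumptions, not settings taken from Premiere Pro or any other editor.

```python
from dataclasses import dataclass

FPS = 30  # assumed timeline frame rate, matching the 30fps export setting


@dataclass
class Edit:
    """Hypothetical model of where the incoming clip starts, in timeline frames."""
    video_in: int  # frame where the next clip's picture appears
    audio_in: int  # frame where the next clip's sound starts


def straight_cut(cut_frame: int) -> Edit:
    """Amateur cut: picture and sound switch on the same frame."""
    return Edit(video_in=cut_frame, audio_in=cut_frame)


def j_cut(cut_frame: int, audio_lead_frames: int) -> Edit:
    """J-Cut: the next clip's audio starts early; its picture still appears at cut_frame."""
    if audio_lead_frames < 0:
        raise ValueError("audio_lead_frames must be >= 0")
    return Edit(video_in=cut_frame, audio_in=max(0, cut_frame - audio_lead_frames))


edit = j_cut(cut_frame=120, audio_lead_frames=8)
print(edit)  # Edit(video_in=120, audio_in=112)
print(f"Audio leads the picture by {(edit.video_in - edit.audio_in) / FPS:.2f}s")
```

The only difference between the two functions is `audio_in`: the viewer hears frame 112 of the new clip while still looking at the old one.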

The "Millisecond" Gap

We cut dead air down to the frame. If a pause between sentences lasts longer than 0.2 seconds, it gets cut.

- Why? The modern brain processes information faster than you can speak. If you pause, the viewer's dopamine drops, and they scroll. We keep the audio waveform looking like a solid brick wall: relentless and dense.
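One way to find the gaps to cut is sketched below. It assumes you already have speech start and end times (for example, word timestamps from a transcription tool). The function names and that input format are illustrative assumptions. Only pauses strictly longer than 0.2 seconds are flagged, matching the rule above.

```python
from typing import List, Tuple

Span = Tuple[float, float]  # (start, end) in seconds


def find_dead_air(speech: List[Span], max_gap: float = 0.2) -> List[Span]:
    """Return every silent gap between consecutive speech spans longer than max_gap."""
    ordered = sorted(speech)
    cuts: List[Span] = []
    for (_, prev_end), (next_start, _) in zip(ordered, ordered[1:]):
        if next_start - prev_end > max_gap:  # strictly "longer than" 0.2s
            cuts.append((prev_end, next_start))
    return cuts


def tightened_length(speech: List[Span], max_gap: float = 0.2) -> float:
    """Clip length after each flagged gap is removed entirely (an assumption;
    an editor might leave a frame or two of breath instead)."""
    if not speech:
        return 0.0
    ordered = sorted(speech)
    total = max(end for _, end in ordered) - ordered[0][0]
    removed = sum(end - start for start, end in find_dead_air(ordered, max_gap))
    return total - removed


# Hypothetical speech spans, e.g. taken from a transcript's word timestamps.
speech = [(0.0, 1.8), (1.9, 3.5), (4.3, 6.0)]
print(find_dead_air(speech))  # [(3.5, 4.3)]
print(round(tightened_length(speech), 2))  # 5.2
```

The 0.1-second pause survives because it is under the threshold. The 0.8-second pause is cut, shortening the clip from 6.0 to 5.2 seconds.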

Chapter 2: Visual Dynamics (Controlling the Eye)

Once the audio is tight, we must address the eyes. Rule #1 of The Agency: The screen must never be static.

If you are a "Talking Head" standing in the center of the frame for 10 seconds, you are boring. We use Artificial Camera Movement to simulate a multi-camera studio setup, even if you filmed it on one iPhone.

1. The "Scale Pulse" (The 2.5s Rule)

Every 2 to 4 seconds, we change the scale of the video clip.

- 0:00 - 0:04: Scale 100% (Medium Shot).

- 0:04 - 0:08: Scale 115% (Close Up).

- 0:08 - 0:12: Scale 100% (Medium Shot).

- The Subconscious Effect: This mimics a viewer "leaning in" to pay attention. It resets the viewer's visual attention span without them realizing it.

2. The "Punch In" Emphasis

When you deliver the Key Insight or the Punchline, we "Punch In" aggressively.

- Speaker: "The secret to wealth..." (Scale 100%)

- Speaker: "...is Leverage." (Hard Cut to Scale 130%)

- Why? It tells the viewer: "This specific word is important. Pay attention."

3. The "Slow Zoom" (The Ken Burns Effect)

During a serious or emotional story, we apply a continuous, subtle zoom (from 100% to 110% over 5 seconds).

- The Vibe: It creates intensity and intimacy. It draws the viewer into the screen.
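The three moves above reduce to a scale-over-time function. This sketch is illustrative only. The 4-second interval, the 100%/115%/130% scales, and the 100% to 110% zoom over 5 seconds come from the text. The function names, and the rule that a punch-in overrides a slow zoom, which in turn overrides the pulse, are assumptions. In a real edit these values would become keyframes on the clip's scale.

```python
from typing import Iterable, Tuple

PUNCH_SCALE = 130.0  # "Hard Cut to Scale 130%" from the Punch In example


def pulse_scale(t: float, interval: float = 4.0,
                medium: float = 100.0, close: float = 115.0) -> float:
    """Scale Pulse: alternate Medium Shot (100%) and Close Up (115%) every `interval` seconds."""
    return medium if int(t // interval) % 2 == 0 else close


def slow_zoom(t: float, start: float, duration: float = 5.0,
              begin: float = 100.0, end: float = 110.0) -> float:
    """Slow Zoom: linear ramp from `begin` to `end` over `duration` seconds."""
    progress = min(max((t - start) / duration, 0.0), 1.0)  # clamp to [0, 1]
    return begin + (end - begin) * progress


def scale_at(t: float,
             punch_ins: Iterable[Tuple[float, float]] = (),
             zoom_starts: Iterable[float] = (),
             zoom_duration: float = 5.0) -> float:
    """Scale (%) at time t. Assumed priority: punch-in, then slow zoom, then pulse."""
    for start, end in punch_ins:
        if start <= t < end:
            return PUNCH_SCALE  # hard cut, no easing
    for start in zoom_starts:
        if start <= t < start + zoom_duration:
            return slow_zoom(t, start, zoom_duration)
    return pulse_scale(t)


print([pulse_scale(t) for t in (0, 4, 8)])  # [100.0, 115.0, 100.0]
print(slow_zoom(2.5, start=0.0))  # 105.0
```

Sampling `scale_at(frame / 30, ...)` for each frame of a 30fps timeline would give one scale value per frame.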

Chapter 3: The B-Roll Strategy (Contextual Visualization)

You cannot be the only thing on the screen. You must use B-Roll (secondary footage) to visualize what you are saying. But not all B-Roll is created equal.

The "Stock Footage" Trap

Most editors go to Pexels or other generic stock sites and download a clip of a "businessman shaking hands." Do not do this. It looks cheap. It looks like a commercial. Gen Z can smell stock footage from a mile away, and they hate it.

The "Native" B-Roll Strategy

We use three types of visuals that convert:

- Screen Recordings: If you are talking about a website, show the website. If you are talking about revenue, show the Stripe dashboard. It proves you aren't lying.

- Self-Shot B-Roll: We ask our clients to film 5 minutes of themselves "working"—typing, walking, drinking coffee, pointing at a whiteboard. Using your own footage builds massive trust because it is authentic.

- Pop Culture References: (Use with caution). Using a quick clip from The Wolf of Wall Street or The Office can add humor and relatability, acting as a "Pattern Interrupt."

Chapter 4: The Caption Architecture (Kinetic Typography)

Captions are no longer just for accessibility. They are for Retention. 80% of people watch videos with the sound off, and even viewers with the sound on read along. We don't use standard subtitles. We use Kinetic Typography.

1. The "Karaoke" Style

We highlight the specific word being spoken in a different color (usually brand yellow or green).

- Why? It forces the eye to track the text from left to right, locking the viewer into the video.

2. The "Keyword" Pop

We don't just caption; we emphasize.

- Spoken: "You need to make more money."

- Visual: The word MONEY appears in a larger font, bold, green, with a "Pop" sound effect and a screen shake.

- The Goal: Even if the viewer is skimming, they see the keywords and understand the topic.

3. Positioning Matters

Never put captions at the very bottom (blocked by the post description and on-screen buttons) or the very top (blocked by the 'Following' tabs).

- The Safe Zone: The middle-lower third of the screen is prime real estate. We keep it clean and legible.
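The Karaoke highlight and the Keyword Pop both come down to word timing. This is a minimal sketch, assuming you already have per-word start and end times (for example, from a transcription tool). The `Word` class, the keyword list, and the text-only preview format are hypothetical. A real caption tool would render color, size, sound, and motion instead of brackets and capitals.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical keyword list; in practice the editor picks these per video.
KEYWORDS = {"money", "leverage"}


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


def active_word(words: List[Word], t: float) -> Optional[int]:
    """Karaoke style: index of the word being spoken at time t (None between words)."""
    for i, w in enumerate(words):
        if w.start <= t < w.end:
            return i
    return None


def preview_line(words: List[Word], t: float) -> str:
    """Plain-text preview of one caption frame:
    [brackets] = highlighted (karaoke) word, UPPERCASE = keyword pop."""
    current = active_word(words, t)
    tokens = []
    for i, w in enumerate(words):
        token = w.text.upper() if w.text.lower().strip(".,!?") in KEYWORDS else w.text
        tokens.append(f"[{token}]" if i == current else token)
    return " ".join(tokens)


line = [Word("You", 0.0, 0.2), Word("need", 0.2, 0.4), Word("to", 0.4, 0.5),
        Word("make", 0.5, 0.7), Word("more", 0.7, 0.9), Word("money.", 0.9, 1.3)]
print(preview_line(line, 0.3))  # You [need] to make more MONEY.
print(preview_line(line, 1.0))  # You need to make more [MONEY.]
```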

Chapter 5: Advanced Sound Design (The Invisible Glue)

If you watch a horror movie on mute, it isn’t scary. If you watch a comedy on mute, it isn’t funny. Audio dictates emotion.

Most editors slap a trending song on the video and call it a day. That is lazy. At EchoPulse, we use a technique called "Audio Texturing." We treat the soundscape like a musical composition.

1. The "Swoosh" and "Pop" Protocol

Your eyes and ears must be synchronized. If something moves on screen, it must make a sound.

- Transition? Needs a "Whoosh" or "Whip" sound.

- Text appears? Needs a "Pop," "Click," or "Typewriter" sound.

- Why? This provides a dopamine hit. It confirms to the brain that the visual change actually happened. It makes the video feel tactile and expensive.

2. The "Riser" (Building Anticipation)

Before you reveal a big secret or a shocking statistic, we use a Riser.

- What is it? A sound effect that slowly increases in pitch and volume (like a reverse cymbal).

- The Effect: It subconsciously tells the viewer: "Pay attention, something huge is coming." It creates tension that must be resolved by keeping their eyes on the screen.

3. Music Ducking (The "Breath" of the Edit)

Background music should never fight with your voice. It should dance with it.

- The Technique: We use "Auto-Ducking." When you speak, the music drops to -20dB. When you pause for effect, the music swells back up to -5dB.

- The Result: The video feels rhythmic. The music fills the emotional gaps, making your points hit harder.
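Decibels are logarithmic, so it helps to see the ducking levels as gain. A level of L dB corresponds to an amplitude multiplier of 10^(L/20). That makes -20dB one tenth of full amplitude and -5dB a little over half. The sketch below also builds a per-frame ducking envelope from a voice-activity track. The boolean voice-activity input and the attack/release rates are assumptions for illustration; a real auto-ducking feature has its own controls for this.

```python
from typing import List


def db_to_gain(db: float) -> float:
    """Convert decibels to a linear amplitude multiplier (0 dB -> 1.0)."""
    return 10 ** (db / 20)


def ducking_envelope(voice_active: List[bool],
                     duck_db: float = -20.0, open_db: float = -5.0,
                     attack_db: float = 3.0, release_db: float = 1.0) -> List[float]:
    """Per-frame music level in dB for a voice-activity track.

    duck_db and open_db are the -20dB / -5dB levels from the text.
    attack_db and release_db are assumed per-frame rates: the music dips
    quickly when the voice starts and swells back more slowly in pauses.
    """
    level = open_db
    levels = []
    for speaking in voice_active:
        if speaking:
            level = max(level - attack_db, duck_db)   # fade down, stop at -20 dB
        else:
            level = min(level + release_db, open_db)  # swell up, stop at -5 dB
        levels.append(level)
    return levels


print(db_to_gain(-20))  # 0.1
print(f"{db_to_gain(-5):.3f}")  # 0.562
print(ducking_envelope([True] * 6 + [False] * 3))
# [-8.0, -11.0, -14.0, -17.0, -20.0, -20.0, -19.0, -18.0, -17.0]
```

Fading instead of jumping between the two levels keeps the music from sounding chopped when the voice starts and stops.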

Chapter 6: Color Grading (The "EchoPulse" Polish)

Raw iPhone footage looks "digital." It looks like a phone video. We want your content to look like a Netflix Documentary.

We don't just slap an Instagram filter on it. We use professional Color Grading (Lumetri Color in Premiere Pro, or the Color page in DaVinci Resolve) to fix the "Chemistry" of the image.

1. Skin Tone Protection

The algorithm hates bad skin tones. If you look pale, sickly, or too red, the video looks low quality. We isolate skin tones, keep them warm, and push the background toward teal. This "Teal and Orange" contrast makes you pop off the background.

2. Vibrancy vs. Saturation

Social media is a battle for attention. Dull colors get ignored.

- We boost Vibrancy (which boosts muted colors the most and leaves already-saturated colors mostly alone) rather than just Saturation (which boosts every color by the same factor and makes you look like an Oompa Loompa).

- Goal: The video should look 10% more colorful than real life. This stops the scroll.
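The difference between the two controls can be sketched per pixel. This is a simplified assumption working in HSV, not the actual math inside Lumetri or Resolve. Plain saturation scales every color's saturation by the same factor. The vibrance-style boost is weighted by (1 - s). Applied to a muted color and an already vivid one, both controls lift the muted color by a similar amount. Only plain saturation drives the vivid orange all the way to full saturation, which is where the Oompa Loompa look comes from.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]  # channels in the range 0.0-1.0


def saturation_of(rgb: RGB) -> float:
    """HSV saturation of an RGB color."""
    return colorsys.rgb_to_hsv(*rgb)[1]


def boost_saturation(rgb: RGB, amount: float) -> RGB:
    """Plain saturation: every color's saturation is scaled by the same factor."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb(h, min(1.0, s * (1 + amount)), v)


def boost_vibrance(rgb: RGB, amount: float) -> RGB:
    """Vibrance-style boost: weighted by (1 - s), so muted colors gain the most
    and already-vivid colors barely move (and don't clip)."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb(h, min(1.0, s * (1 + amount * (1 - s))), v)


muted = (0.6, 0.5, 0.5)  # dull, low-saturation color
vivid = (0.9, 0.3, 0.1)  # already strongly saturated orange
for name, color in (("muted", muted), ("vivid", vivid)):
    print(name,
          round(saturation_of(color), 3),                          # original
          round(saturation_of(boost_saturation(color, 0.3)), 3),   # +30% saturation
          round(saturation_of(boost_vibrance(color, 0.3)), 3))     # +30% vibrance
```

Pure greys (saturation 0) stay grey under both functions, since there is no hue to amplify.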

Chapter 7: The Export Protocol (Don't Trip at the Finish Line)

You can edit a masterpiece, but if you export it wrong, Instagram and TikTok will destroy it. These platforms have aggressive "Compression Algorithms." If your file is too big, they crush it, and your video comes out pixelated and blurry.

The EchoPulse Export Settings (2025 Standard): Don't guess. Use these exact settings.

- Resolution: 1080x1920 (Vertical).

- Controversial Truth: Do NOT upload 4K to TikTok/Reels. The platform will compress it down to 1080p anyway, and their compressor is worse than ours. We upload 1080p so we control the quality.

- Frame Rate: 30fps (Frames Per Second).

- Note: Movies are 24fps. TV is 30fps (29.97fps in NTSC regions such as North America; PAL regions use 25fps). If we use slow motion, we shoot at 60fps.

- Bitrate: 15-20 Mbps (VBR 1 Pass).

- Why: High enough for quality, low enough to avoid the "compression hammer."

- Codec: H.264 (MP4 container).

- Note: It is the universal language of the internet.
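The bitrate setting is easiest to sanity-check with arithmetic. File size is roughly bitrate times duration divided by 8 bits per byte, so a 60-second reel at 15-20 Mbps comes out at about 112 to 150 MB of video before audio. The helper names below are hypothetical. The math ignores container overhead and the way a VBR encoder spends more bits on busy scenes. The same sketch shows why 60fps footage works for slow motion on a 30fps timeline, and confirms that 1080x1920 is the 9:16 vertical format.

```python
def file_size_mb(video_mbps: float, seconds: float, audio_kbps: float = 0.0) -> float:
    """Approximate file size in decimal megabytes: total bitrate x duration / 8.
    audio_kbps is an assumed extra input (the settings above don't specify audio).
    Ignores container overhead; a VBR encode lands near this figure, not exactly on it."""
    total_mbps = video_mbps + audio_kbps / 1000
    return total_mbps * seconds / 8


def playback_speed(shot_fps: float, timeline_fps: float) -> float:
    """Speed when every recorded frame is shown once on the timeline (conformed slow motion)."""
    return timeline_fps / shot_fps


for mbps in (15, 20):
    print(f"{mbps} Mbps x 60 s = {file_size_mb(mbps, 60):.1f} MB")  # 112.5 MB, then 150.0 MB
print(playback_speed(60, 30))  # 0.5, i.e. 60fps footage plays at half speed on a 30fps timeline
print(1080 / 1920 == 9 / 16)  # True
```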

Conclusion: Stop "Editing." Start Engineering.

If this sounds like a lot of work for a 60-second video... it is. A single high-performing reel at EchoPulse has:

- 40+ Jump Cuts.

- 20+ Sound Effects.

- 15+ Visual Zoom/Scale changes.

- Custom Color Grading.

- Kinetic Typography.

This is why DIY fails. You are a CEO. You are a Founder. You should be closing deals and leading your team, not hunting for "whoosh" sound effects at 2:00 AM.

You provide the Message. EchoPulse provides the Engineering. We take your raw thoughts and turn them into algorithmic gold.